Ryan Cummings and Ernie Tedeschi have a very interesting article in BriefingBook today which casts new light on the disjuncture between measured sentiment and conventional macroeconomic indicators. Cummings and Tedeschi document how the move to online sampling has altered the characteristics of the University of Michigan Consumer Sentiment series.

…we believe online respondents are resulting in the level of the overall sentiment and current conditions indices being meaningfully lower, making more recent UMich data points inconsistent with pre-April 2024 data points. Specifically, we use a simple statistical model to estimate that the effect of the methodological switch from phone to online is currently resulting in sentiment being 8.9 index points – or more than 11 percent – lower than it would be if interviews were still collected through the phone.

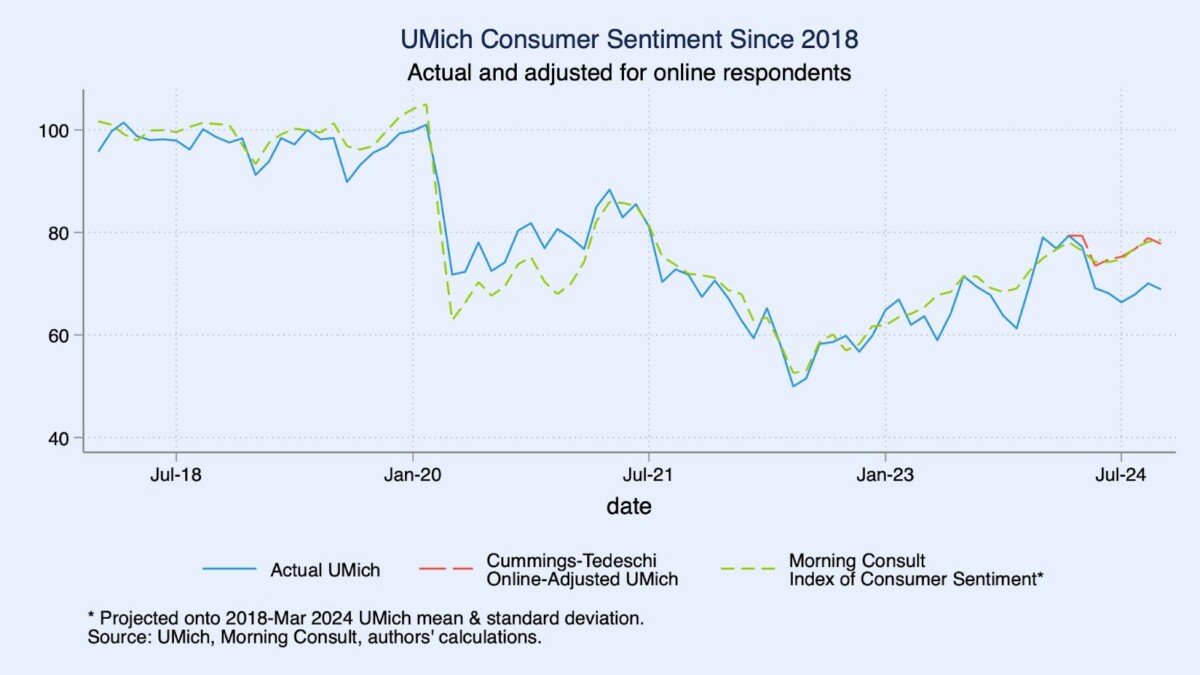

To demonstrate that the switch to online surveying has imparted a structural break in the UMich series, they compare against the Morning Consult series.

Source: Cummings and Tedeschi (2024).

The Morning Consult survey has been conducted online all along, so it serves as a control. As for the key reason for the shift in measured sentiment, Cummings and Tedeschi point to the age composition of the online sample:

A cursory look at the weighted age distribution of telephone and online assignments suggests that, no, they were not random in practice. For example, online respondents were more likely to be older in those transition months, which might affect their sentiment responses. …

A more formal multilevel logit model–which measures the likelihood of an individual being in a certain age cohort after accounting for other demographic factors–confirms this difference. Respondents 65+ for example had a 52 percent chance of being in the online group in April-June, which is more than twice as likely as respondents 18-24, and this difference is statistically significant…

I don’t know how the greater presence of older respondents interacts with the partisan divide examined in this post (are Republican/lean Republican respondents older than the corresponding Democratic/lean Democratic respondents?).

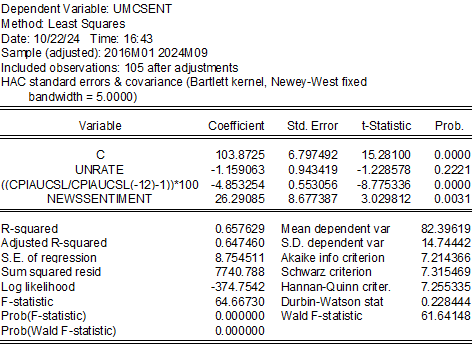

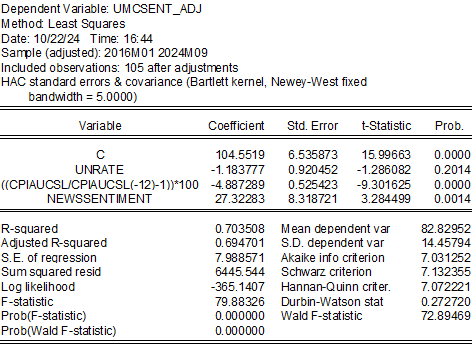

What I can say is that the adjusted Cummings-Tedeschi series exhibits less evidence of a structural break than the official UMichigan series, when using as regressors unemployment, y/y CPI inflation and the SF Fed news sentiment index.

Consider these two regressions, first with the official series, and the second with the Cummings-Tedeschi adjusted series:

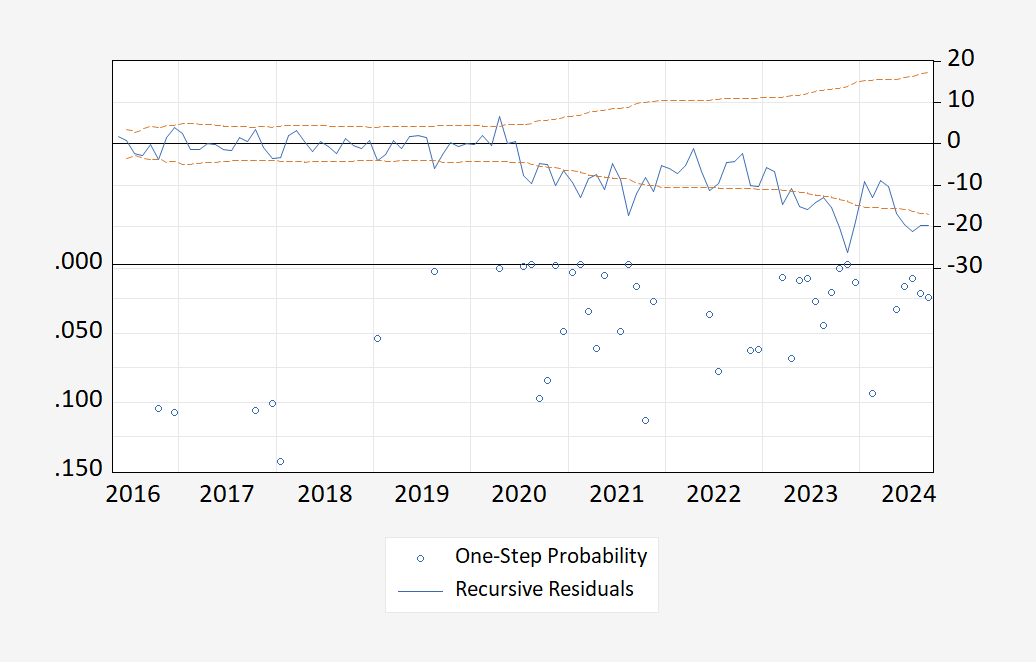

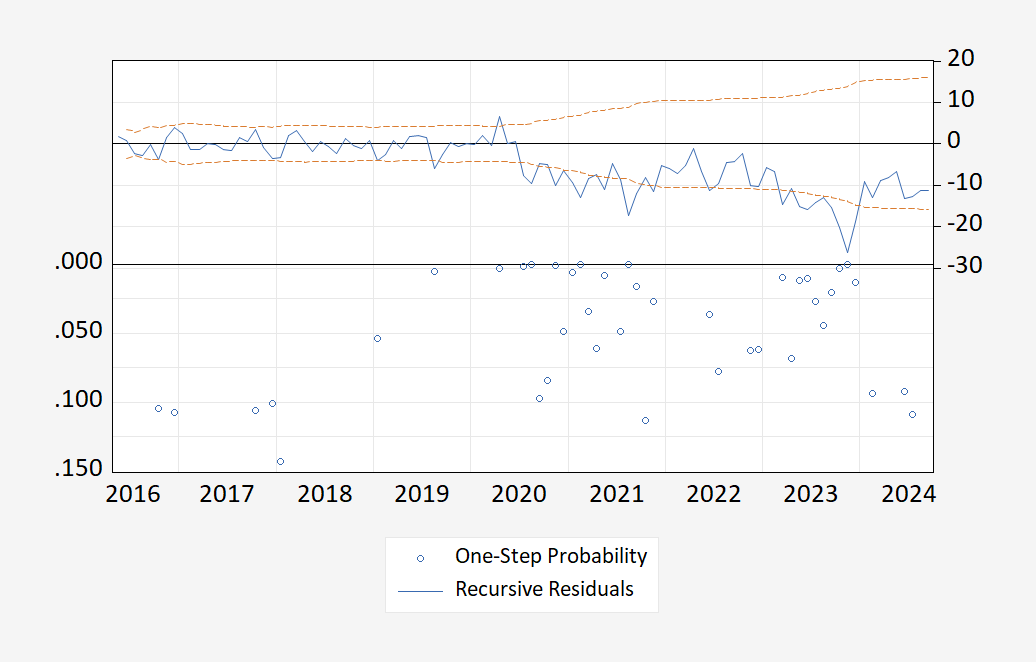
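For readers who prefer an equation, the specification behind both regressions can be sketched as follows. This assumes a linear model with a constant; the notation is illustrative, and any lags or additional terms in the estimated equations are not shown.

$$
S_t = \beta_0 + \beta_1 u_t + \beta_2 \pi_t + \beta_3 n_t + \varepsilon_t
$$

Here $S_t$ is the sentiment index (official or Cummings-Tedeschi adjusted), $u_t$ the unemployment rate, $\pi_t$ y/y CPI inflation, and $n_t$ the SF Fed news sentiment index.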

Now consider the respective recursive 1-step ahead Chow tests for stability.

Note that while both regressions exhibit instability around 2020 and 2023, the Cummings-Tedeschi adjusted series exhibits no structural break in 2024 around the switch to online polling.

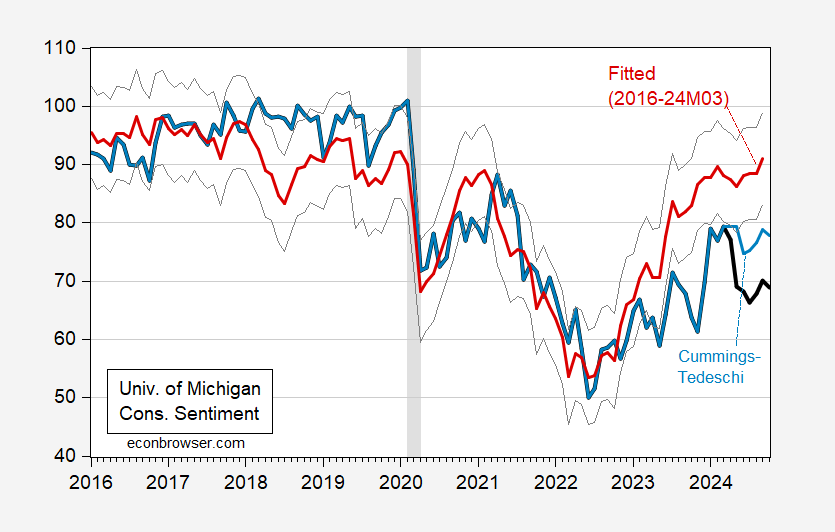
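To make the mechanics of a recursive 1-step ahead Chow test concrete, here is a minimal sketch in plain Python. It is not the code behind the figures above: the helper names `ols_rss` and `one_step_chow` are hypothetical, and the data are made up (one regressor plus a constant, with a 9-point level drop imposed at observation 45). For each date t, the statistic compares the residual sum of squares with and without observation t, scaled by the residual variance of the shorter sample; under stability it is distributed F(1, t − k), where k is the number of regressors.

```python
import math

def ols_rss(X, y):
    """Residual sum of squares from OLS of y on the columns of X."""
    k = len(X[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    beta = [A[i][k] / A[i][i] for i in range(k)]
    return sum((yi - sum(b * x for b, x in zip(beta, row))) ** 2
               for row, yi in zip(X, y))

def one_step_chow(X, y, start):
    """F statistic for each t >= start: does observation t fit the model
    estimated on the t observations that precede it?"""
    k = len(X[0])
    stats = {}
    for t in range(start, len(y)):
        rss_prev = ols_rss(X[:t], y[:t])          # observations 0..t-1
        rss_next = ols_rss(X[:t + 1], y[:t + 1])  # observations 0..t
        stats[t] = (rss_next - rss_prev) / (rss_prev / (t - k))
    return stats

# Made-up data: a constant and one regressor, with a 9-point drop from t = 45.
n, break_at = 60, 45
X = [[1.0, 4.0 + math.cos(0.3 * t)] for t in range(n)]
y = [80.0 - 3.0 * x[1] + 0.5 * math.sin(1.7 * t) - (9.0 if t >= break_at else 0.0)
     for t, x in enumerate(X)]

stats = one_step_chow(X, y, start=10)
largest = max(stats, key=stats.get)
print(largest, round(stats[largest], 1))
```

In this toy example the statistic spikes at the imposed break date. That is the pattern to look for around 2024M04 in the official series, and the pattern that is absent in the adjusted one.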

That being said, the switch in survey methods does not fully explain the gap between observables and sentiment. I estimate the regression over the 2016-2024M03 period, and predict out of sample for 2024M04-M10.

Figure 1: University of Michigan Consumer Sentiment (bold black), Cummings-Tedeschi adjusted series (light blue), fitted (red), +/- one standard error (gray lines). NBER defined peak-to-trough recession dates shaded gray. Source: U.Michigan via FRED, BriefingBook, NBER and author’s calculations.

So possibly less than half of the gap is accounted for by the change in survey methods.
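The arithmetic behind a statement like this can be sketched as follows. Only the 8.9-point adjustment comes from Cummings and Tedeschi; the fitted and official values below are placeholders chosen for illustration, not numbers read off the figure, and `share_explained` is a hypothetical helper.

```python
def share_explained(adjustment, fitted, official):
    """Fraction of the fitted-minus-official gap closed by the survey-mode adjustment."""
    gap = fitted - official
    if gap <= 0:
        raise ValueError("official series is not below the fitted value")
    return adjustment / gap

adjustment = 8.9   # index points, Cummings-Tedeschi estimate of the online effect
fitted = 90.0      # placeholder: out-of-sample prediction from the regression
official = 70.0    # placeholder: official UMich reading

share = share_explained(adjustment, fitted, official)
print(f"{share:.1%}")
```

With these placeholder values the adjustment accounts for under half of the shortfall; the actual share depends on the month and on the fitted values from the regression.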